Clarkson Computer Science PhD student Richard Turner’s research on using video motion vectors for structure from motion 3D reconstruction was awarded Best Paper at the 19th International Conference on Signal Processing and Multimedia Applications (SIGMAP 2022), held in Lisbon, Portugal. Richard is jointly advised by Dr. Sean Banerjee and Dr. Natasha Banerjee, Associate Professors in Computer Science. SIGMAP brings together international scholars working on the theory and practice of representing, storing, authenticating, and communicating multimedia information from images, videos, and audio data, as well as emerging sources of multimodal data such as text, social media, and healthcare.

Richard’s work plays a critical role in reducing the computational resources needed for 3D scene reconstruction using structure from motion (SfM). 3D scene reconstruction uses computer vision techniques to create three-dimensional models from a series of two-dimensional images, and it is an important step in giving robots and autonomous vehicles the ability to navigate their environments. SfM is highly computationally intensive and is typically performed offline on powerful computing hardware. There is growing interest in the research community in performing SfM on the low-power devices typically found in unmanned aerial and ground vehicles as well as autonomous vehicles.

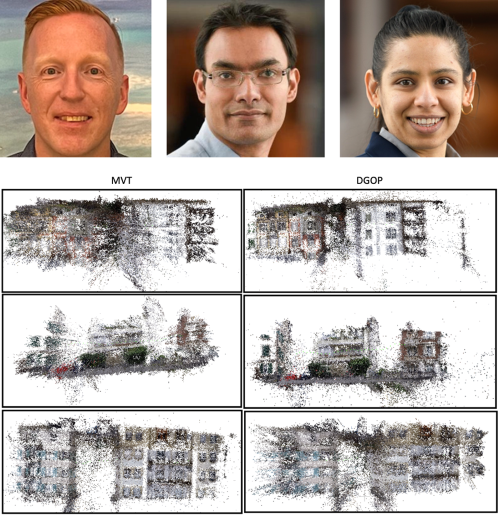

Richard’s approach leverages the motion vectors used for video compression. H.264 video compression has become the prevalent choice for devices that require live video streaming, including mobile phones, laptops, and Micro Aerial Vehicles (MAVs). H.264 uses motion estimation to predict the displacement of groups of pixels, called macroblocks, between two or more video frames. This suits live video well because each frame contains much of the information found in previous and future frames. By estimating a motion vector for each macroblock in every frame, the encoder obtains significant compression. Richard’s approach provides a near real-time feature detection and matching algorithm for SfM reconstruction using the properties of motion estimation found in H.264 video compression encoders. The approach was validated with video taken from an MAV flying within an urban environment.
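To make the idea concrete, the sketch below shows one simple way per-macroblock motion vectors could be turned into point matches between two frames. It is an illustration only, not the algorithm from the paper: the function name `motion_vectors_to_matches`, the 16x16 block size with one vector per macroblock, the whole-pixel units, and the use of the macroblock centre as the feature location are all assumptions made for this example.

```python
# Illustrative sketch only; this is not the method described in the paper.
# Assumptions: one motion vector (dx, dy) per 16x16 macroblock, expressed in
# pixels and pointing from the current frame to the reference frame.

MACROBLOCK = 16  # assumed macroblock size in pixels


def motion_vectors_to_matches(motion_vectors, block_size=MACROBLOCK,
                              min_magnitude=0.0):
    """Turn a grid of per-macroblock motion vectors into point matches.

    motion_vectors[row][col] is a (dx, dy) tuple, or None when a macroblock
    carries no motion vector (for example, an intra-coded block).
    Returns a list of ((x_cur, y_cur), (x_ref, y_ref)) pairs, using the
    macroblock centre as a hypothetical feature location.
    """
    matches = []
    for row, row_vectors in enumerate(motion_vectors):
        for col, mv in enumerate(row_vectors):
            if mv is None:
                continue  # nothing to match for this block
            dx, dy = mv
            if (dx * dx + dy * dy) ** 0.5 < min_magnitude:
                continue  # optionally drop blocks that barely moved
            x_cur = col * block_size + block_size / 2
            y_cur = row * block_size + block_size / 2
            matches.append(((x_cur, y_cur), (x_cur + dx, y_cur + dy)))
    return matches


# A tiny 2x2 grid of macroblocks with made-up motion vectors.
field = [
    [(2.0, 0.0), None],
    [(0.0, 0.0), (-1.5, 3.0)],
]
matches = motion_vectors_to_matches(field)
moving_only = motion_vectors_to_matches(field, min_magnitude=1.0)
```

Matches of this kind could then be passed to a standard SfM pipeline in place of separately detected and matched features, which is where the computational savings would come from. A real encoder also uses sub-macroblock partitions and fractional-pixel vectors, which this sketch ignores.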

As Senior Principal Software Engineer at Northrop Grumman, Richard works on next-generation challenges in space. His research addresses real-time 3D mapping of environments for autonomous navigation. Richard’s work has a broader impact in advancing technologies for scene reconstruction on the low-resource devices found in unmanned aerial and ground vehicles, for use in challenging scenarios such as disasters or mass casualty events. Richard is currently leading an interdisciplinary team of graduate and undergraduate students to deploy his algorithm on unmanned aerial vehicles designed by the team.

Richard is a member of the Terascale All-sensing Research Studio (TARS) at Clarkson University. TARS supports the research of 15 graduate students and nearly 20 undergraduate students every semester. TARS has one of the largest high-performance computing facilities at Clarkson, with 275,000+ CUDA cores and 4,800+ Tensor cores spread over 50+ GPUs, and 1 petabyte of (nearly full!) storage. TARS houses the Gazebo, a massively dense multi-viewpoint multi-modal markerless motion capture facility for imaging multi-person interactions, containing 192 high-speed cameras running at 226 FPS, 16 Microsoft Azure Kinect RGB-D sensors, 12 Sierra Olympic Viento-G thermal cameras, and 16 surface electromyography (sEMG) sensors. It also houses the Cube, a single- and two-person 3D imaging facility containing 4 high-speed cameras, 4 RGB-D sensors, and 5 thermal cameras. TARS performs research on using deep learning to glean understanding of natural multi-person interactions from massive datasets, in order to enable next-generation technologies, e.g., intelligent agents and robots, to integrate seamlessly into future human environments.