Terascale All-sensing Research Studio (TARS) PhD student Nikolas Lamb will present his accepted paper at the 2022 European Conference on Computer Vision (ECCV), one of the three highest-ranking venues for research in computer vision.

Lamb's research on repairing damaged objects is advised by Dr. Natasha Banerjee and Dr. Sean Banerjee, Associate Professors in the Department of Computer Science and co-directors of TARS. The paper, which will appear in the conference proceedings, is the first from Clarkson to be published at ECCV, a venue dominated by researchers from large technology companies such as Amazon, Google, Meta/Facebook, Microsoft, Adobe, and Apple, and from top-tier research institutions such as Harvard, Stanford, MIT, Columbia, Yale, Princeton, UC Berkeley, Carnegie Mellon, Oxford, Cambridge, and the Max Planck Institute, to name a few.

Because knowledge in computer science changes so rapidly, conferences are the standard venue for disseminating new research quickly; they are peer-reviewed and held in similar standing to journals in other fields. ECCV is known worldwide as one of the three highest-ranking peer-reviewed venues in computer vision, and because it is held only once every two years, it is one of the toughest computer vision venues in which to publish.

According to Google Scholar, ECCV has an h5-index of 186 and ranks third in h5-index among computer vision conferences, the other two top venues being the Conference on Computer Vision and Pattern Recognition (CVPR) and the International Conference on Computer Vision (ICCV). The conference also reflects the broad scientific impact of computer vision: it currently ranks 40th in h5-index among all publication venues (conferences and journals) and 15th in Engineering & Computer Science. Lamb is one of a select few attendees to receive an ECCV Student Grant, which covers his registration and travel to the conference, held October 23-27, 2022.

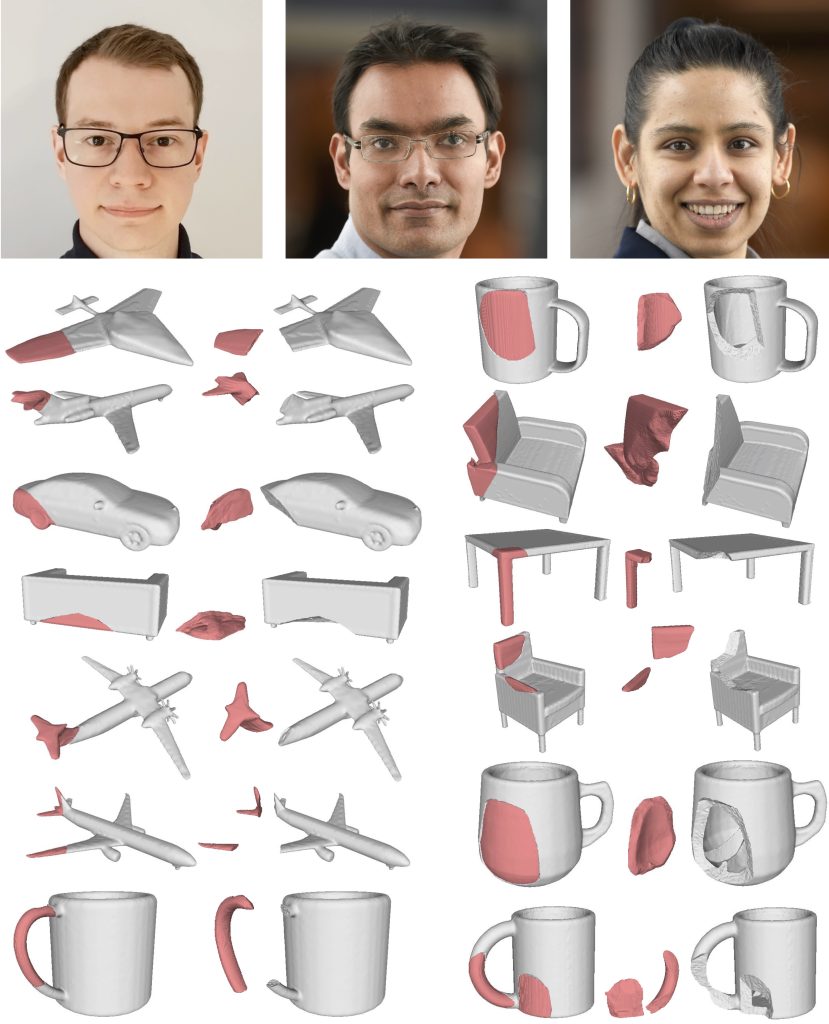

In July 2022, Lamb presented MendNet at the Symposium on Geometry Processing. MendNet, then a state-of-the-art method for repairing damaged objects, uses deep neural networks to represent the structure of damaged, complete, and repaired objects. Just a few months later, Lamb's ECCV paper contributes a new algorithm, DeepMend, that overcomes the limitations of his prior work by tying a mathematical representation of the occupancy of damaged and repaired objects to the complete object and the fracture surface. This enables a compact representation of shape via deep networks and establishes a new state of the art.
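To give a feel for how occupancy can tie these shapes together, here is a toy sketch. It is an illustration only, not Lamb's code: in DeepMend the occupancies come from learned neural networks, whereas here, as an assumption, a unit sphere stands in for the complete object and the flat plane x = 0.5 stands in for the fracture surface. All function names are hypothetical. A point belongs to the damaged object if it lies inside the complete object and on the kept side of the fracture, and to the repair part if it lies inside the complete object and on the missing side.

```python
import math

# Hypothetical toy stand-ins for learned networks: in the real method these
# occupancies would be predicted by neural networks, not fixed formulas.

def complete_occupancy(p):
    """1 if point p lies inside the complete object (here, a unit sphere), else 0."""
    return 1 if math.dist(p, (0.0, 0.0, 0.0)) <= 1.0 else 0

def fracture_occupancy(p):
    """1 if p lies on the side of the fracture surface (here, the plane x = 0.5)
    that stays with the damaged object, else 0."""
    return 1 if p[0] <= 0.5 else 0

def damaged_occupancy(p):
    # Damaged object: inside the complete object AND on the kept side of the fracture.
    return complete_occupancy(p) * fracture_occupancy(p)

def repair_occupancy(p):
    # Repair part: inside the complete object AND on the missing side of the fracture.
    return complete_occupancy(p) * (1 - fracture_occupancy(p))

points = [(0.0, 0.0, 0.0),  # inside the sphere, kept side
          (0.8, 0.0, 0.0),  # inside the sphere, missing side
          (2.0, 0.0, 0.0)]  # outside the sphere

print([damaged_occupancy(p) for p in points])  # [1, 0, 0]
print([repair_occupancy(p) for p in points])   # [0, 1, 0]
```

Because every point inside the complete object falls on exactly one side of the fracture, the damaged object and the repair part together fill the complete object without overlapping.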

Lamb's rapid, and still ongoing, release of new state-of-the-art algorithms is in line with the accelerated pace of research in computer science. As Alexei Efros, winner of the Association for Computing Machinery (ACM) Prize in Computing and a computer science professor at the University of California, Berkeley, says, "The half-life of knowledge in computer science is quite short. In machine learning, it is about three months."

Lamb's research puts the repair of damaged objects within reach of the average consumer, moving us closer to the sustainable use of everyday items. It also bridges research in materials science and computer science by tying artificial intelligence to the definition of damaged object geometry, enabling in-the-wild repair. By using deep learning to hypothesize what a repair part should look like, Lamb's work also contributes to the restoration of objects of cultural heritage and items of personal significance, such as a precious piece of pottery.

TARS, of which Lamb is a member, conducts research on making next-generation artificial intelligence and robotic systems human-aware. Research at TARS spans fields such as computer vision, computer graphics, human-computer interaction, robotics, virtual reality, and computational fabrication. TARS supports the research of 15 graduate students and nearly 20 undergraduate students every semester. TARS has one of the largest high-performance computing facilities at Clarkson, with 275,000+ CUDA cores and 4,800+ Tensor cores spread over 50+ GPUs, and 1 petabyte of (nearly full!) storage. TARS also houses two imaging facilities. The Gazebo is a massively dense, multi-viewpoint, multimodal markerless motion capture facility for imaging multi-person interactions, containing 192 high-speed cameras running at 226 FPS, 16 Microsoft Azure Kinect RGB-D sensors, 12 Sierra Olympic Viento-G thermal cameras, and 16 surface electromyography (sEMG) sensors. The Cube is a single- and two-person 3D imaging facility containing 4 high-speed cameras, 4 RGB-D sensors, and 5 thermal cameras. The team thanks the Office of Information Technology for providing access to the ACRES GPU node with 4 V100s containing 20,480 CUDA cores and 2,560 Tensor cores.